Hello,

I’m having an issue while uploading a large backup to Amazon S3. It seems that Amazon has an upper limit on the number of file parts. The limit is set to 10k.

As I understand it, the solution is simple: increase the size of a single package so that the total number of packages drops below 10k. How can I do that in SQLBackupAndFTP? I’ve got v10.1.15.
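The proposed fix comes down to a ceiling division: the smallest package size that keeps an upload within 10,000 parts is the file size divided by 10,000, rounded up. A minimal Python sketch (the helper names are hypothetical and not part of SQLBackupAndFTP or any S3 SDK):

```python
PART_LIMIT = 10_000        # S3 accepts part numbers 1..10000 in one multipart upload
DEFAULT_PART = 16 * 2**20  # 16 MiB (16,777,216 bytes), the part size seen in the log


def parts_needed(file_size: int, part_size: int) -> int:
    """Number of parts needed to upload file_size bytes (ceiling division)."""
    return -(-file_size // part_size)


def min_part_size(file_size: int, max_parts: int = PART_LIMIT) -> int:
    """Smallest part size in bytes that keeps the upload within max_parts parts."""
    return -(-file_size // max_parts)


size = 170 * 10**9                         # e.g. a 170 GB backup
print(parts_needed(size, DEFAULT_PART))    # 10133, over the limit
print(min_part_size(size))                 # 17000000 bytes, about 16.2 MiB
```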

Thank you

Logs from SQLBackupAndFTP:

| Time | Message |
|---|---|
| 11/24/2019 00:53:00 | WARNING: Failed to upload part #10001; Position: 167772160000; Size: 16777216 > Part number must be an integer between 1 and 10000, inclusive > The remote server returned an error: (400) Bad Request. > The remote server returned an error: (400) Bad Request. |
| 11/24/2019 00:53:00 | Trying to upload part #10001 again… |
| 11/24/2019 00:53:00 | WARNING: Failed to upload part #10002; Position: 167788937216; Size: 16777216 > Part number must be an integer between 1 and 10000, inclusive > The remote server returned an error: (400) Bad Request. > The remote server returned an error: (400) Bad Request. |
| 11/24/2019 00:53:00 | Trying to upload part #10002 again… |
| 11/24/2019 00:53:01 | WARNING: Failed to upload part #10001; Position: 167772160000; Size: 16777216 > Part number must be an integer between 1 and 10000, inclusive > The remote server returned an error: (400) Bad Request. > The remote server returned an error: (400) Bad Request. |
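In these log entries the Position field is the byte offset at which a part starts, i.e. (part number − 1) × part size. A quick check, assuming every part has the constant 16,777,216-byte size the log reports, confirms that part #10001 is simply the first part past S3's 10,000-part limit (`part_offset` is a hypothetical helper):

```python
PART_SIZE = 16_777_216  # "Size" field in the log: 16 MiB per part


def part_offset(part_number: int, part_size: int = PART_SIZE) -> int:
    """Byte offset where a 1-based part starts, assuming all parts are equal-sized."""
    return (part_number - 1) * part_size


# Offsets copied from the WARNING lines in the log
print(part_offset(10001) == 167772160000)  # True
print(part_offset(10002) == 167788937216)  # True
print(10_000 * PART_SIZE)                  # 167772160000 bytes fit in parts 1..10000
```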

Hi jankowicz,

Thank you for the details.

SQLBackupAndFTP version 10 is very old and is no longer supported.

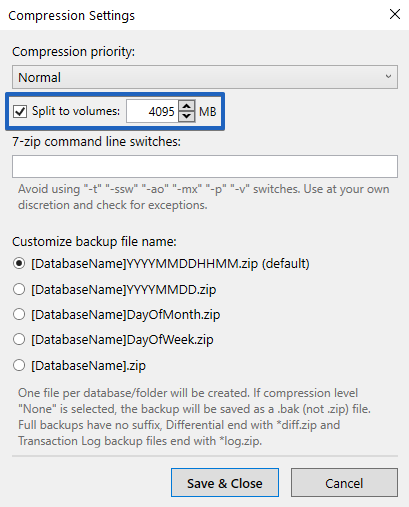

In SQLBackupAndFTP version 12, you can use the option in the “Compression Settings” window that splits your backup files into volumes.
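Splitting into volumes sidesteps the limit, assuming each volume is uploaded as a separate file with the same fixed 16 MiB parts: every volume then has to stay at or below 10,000 × 16 MiB. A hypothetical sizing helper (not an SQLBackupAndFTP setting) for the minimum number of volumes:

```python
PART_SIZE = 16 * 2**20              # 16 MiB part size seen in the log
MAX_PARTS = 10_000                  # S3 multipart part-number limit
MAX_VOLUME = PART_SIZE * MAX_PARTS  # largest file one upload can hold at this part size


def volumes_needed(archive_size: int) -> int:
    """Minimum number of volumes so that no volume exceeds MAX_VOLUME bytes."""
    return -(-archive_size // MAX_VOLUME)  # ceiling division


archive = 164_028_390 * 1024    # archive size from this thread's logs, assuming KB = 1024 bytes
print(volumes_needed(archive))  # 2
```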

Please let us know if there is anything else we can help you with.

Thank you for your response.

I understand. But regarding the new app version: why can’t SQLBackupAndFTP simply let me increase the package size, as other S3 clients do? As I remember, Amazon accepts packages of up to a few GB.

Hi jankowicz,

Thank you for the details. Please give us some time to check the issue.

Hi jankowicz,

Sorry, but the information we have isn’t enough to investigate this case. Could you please send us your Advanced log? Here are more details on how to do it: https://sqlbackupandftp.com/blog/how-to-send-log-to-developers

Please let us know once the log has been sent. Also, please provide your Application ID (“Help” > “About”).

Sorry for the inconvenience.

Sorry, it’s a production system and no experiments are allowed; I’ve given you all the data I had. I’ve bought the newest version of your app. The issue was not fixed, but we worked around it using the “Split to volumes” feature you mentioned.

If you’re interested in fixing this bug, please find additional information below.

You can reproduce it yourself: take a 170 GB file and try to upload it to Amazon S3 as a single file. It seems your app has a hardcoded max package size of 16777216 bytes (16 MiB), which multiplied by 10k packages gives 167,772,160,000 bytes (160,000 MiB), exactly the position at which part #10001 fails.

Amazon states that it accepts packages (parts) of up to 5 GB and up to 10k packages per upload. The issue is caused by SQLBackupAndFTP exceeding the maximum number of packages. It could be avoided by increasing the size of a single package, which decreases the total number of packages (as other S3 clients do).
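To make both limits concrete, here is a minimal validator for a multipart plan with equal-sized parts. The constants follow S3's documented multipart limits (part numbers 1 to 10,000; each part 5 MiB to 5 GiB, except that the last part may be smaller); the function itself is an illustrative sketch, not part of any S3 SDK:

```python
MIN_PART = 5 * 2**20   # 5 MiB minimum for every part except the last
MAX_PART = 5 * 2**30   # 5 GiB maximum part size
MAX_PARTS = 10_000     # part numbers 1..10000


def plan_is_valid(file_size: int, part_size: int) -> bool:
    """True if uploading file_size bytes in part_size chunks stays within S3 multipart limits."""
    parts = -(-file_size // part_size)     # ceiling division
    largest = min(part_size, file_size)    # actual size of the biggest part
    if parts > MAX_PARTS or largest > MAX_PART:
        return False
    # Only the last part may be smaller than 5 MiB
    return parts == 1 or part_size >= MIN_PART


size = 170 * 10**9
print(plan_is_valid(size, 16 * 2**20))  # False: 10,133 parts
print(plan_is_valid(size, 32 * 2**20))  # True: 5,067 parts
```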

It started here:

| Time | Message |
|---|---|
| 11/21/2019 15:14:52 | Compression completed (archive: “abc_FileStore201911211215.7z”, size: 164,028,390 KB) |
| 11/21/2019 15:14:52 | Connecting to E:\abc\Backups |
| 11/21/2019 15:14:53 | Uploading abc_FileStore201911211215.7z to E:\abc\Backups (abc_FileStore201911211215.7z) |
| 11/21/2019 15:27:38 | Remote file size has been successfully verified |
| 11/21/2019 15:27:38 | Disconnecting from E:\abc\Backups |
| 11/21/2019 15:27:38 | Connecting to S3: xyz-abc: backups |
| 11/21/2019 15:27:44 | Uploading abc_FileStore201911211215.7z to S3: xyz-abc: backups (abc_FileStore201911211215.7z) |
| 11/22/2019 01:16:36 | WARNING: Failed to upload part #10001; Position: 167772160000; Size: 16777216 > Part number must be an integer between 1 and 10000, inclusive > The remote server returned an error: (400) Bad Request. > The remote server returned an error: (400) Bad Request. |

It then repeated every single day. The part number and part size did not change; only the database size kept increasing (due to normal production work).

| Time | Message |
|---|---|
| 11/23/2019 14:52:29 | Compression completed (archive: “abc_FileStore201911231215.7z”, size: 164,735,479 KB) |
| 11/23/2019 14:52:29 | Connecting to E:\abc\Backups |
| 11/23/2019 14:52:29 | Uploading abc_FileStore201911231215.7z to E:\abc\Backups (abc_FileStore201911231215.7z) |
| 11/23/2019 15:03:35 | Remote file size has been successfully verified |
| 11/23/2019 15:03:35 | Disconnecting from E:\abc\Backups |
| 11/23/2019 15:03:35 | Connecting to S3: xyz-abc: backups |
| 11/23/2019 15:03:42 | Uploading abc_FileStore201911231215.7z to S3: xyz-abc: backups (abc_FileStore201911231215.7z) |
| 11/24/2019 00:53:00 | WARNING: Failed to upload part #10001; Position: 167772160000; Size: 16777216 > Part number must be an integer between 1 and 10000, inclusive > The remote server returned an error: (400) Bad Request. > The remote server returned an error: (400) Bad Request. |
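Both archive sizes in these logs can be checked against the 16 MiB × 10,000 ceiling. A short sketch, assuming the log's "KB" means 1,024 bytes (with 1,000-byte KB the first archive would fit under the limit, so the failure at part #10001 points to binary units):

```python
PART_SIZE = 16 * 2**20        # 16 MiB, from the "Size" field
LIMIT = PART_SIZE * 10_000    # 167,772,160,000 bytes fit in 10,000 parts

# Sizes from the "Compression completed" lines (assumption: 1 KB = 1024 bytes)
ARCHIVES_KB = {
    "abc_FileStore201911211215.7z": 164_028_390,
    "abc_FileStore201911231215.7z": 164_735_479,
}


def parts_for(kb: int) -> int:
    """Parts needed for an archive of `kb` kilobytes at 16 MiB per part."""
    return -(-(kb * 1024) // PART_SIZE)  # ceiling division


for name, kb in ARCHIVES_KB.items():
    over = kb * 1024 - LIMIT
    print(f"{name}: {parts_for(kb)} parts, {over} bytes over the limit")
```

Under these assumptions the first archive needs 10,012 parts and the second 10,055, so both uploads fail at part #10001 while the part size stays fixed.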

Hi jankowicz,

Thank you for the details. Yes, that information is enough; please give us some time to check the issue.

Sorry for the inconvenience.

Hi jankowicz,

Thank you for waiting. We have fixed the issue in the Alpha edition (http://sqlbackupandftp.com/download/alpha). Could you please install it and check whether it works?

Sorry for the inconvenience.

Thank you. How does it work? Does the application automatically increase the package size to stay under the package count limit, or do I have to set the maximum package size manually?

Hi jankowicz,

It works automatically. By default, the packet size is 16 MB. However, if the size of the uploaded file is more than 16 MB × 10,000, the packet size is calculated as file size / 9999 + 1 MB.
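The sizing rule described above can be sketched in Python. This assumes "MB" means 2^20 bytes (the log's 16,777,216-byte parts are exactly 16 MiB) and uses integer division for "file size / 9999"; the function names are hypothetical and this is not SQLBackupAndFTP's actual code. Because each packet is then strictly larger than file size / 9999, an upload never needs more than 9,999 parts.

```python
MB = 2**20                # assumption: "MB" here means 1,048,576 bytes
DEFAULT_PART = 16 * MB    # default packet size
MAX_PARTS = 10_000        # S3 part-number limit


def packet_size(file_size: int) -> int:
    """Packet size per the rule described in this thread (illustrative sketch)."""
    if file_size <= DEFAULT_PART * MAX_PARTS:
        return DEFAULT_PART
    return file_size // 9999 + MB


def parts_needed(file_size: int, part_size: int) -> int:
    """Number of parts needed (ceiling division)."""
    return -(-file_size // part_size)


for size in (100 * 10**9, 164_028_390 * 1024, 5 * 2**40):
    part = packet_size(size)
    print(size, part, parts_needed(size, part))
```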